Memory management is one of those things in .NET development that easily gets overlooked until things start slowing down or crashing unexpectedly. Most of the time, the problem isn’t that the code is broken; it’s that memory just isn’t being released the way it should. These kinds of memory leaks can build up quietly and cause serious damage before anyone even notices they’re there. It’s like a small leak under your sink: ignore it for weeks, and eventually you’ll be dealing with more than a little water on the floor.

Working on .NET projects, especially across multiple teams or timelines, can make handling memory even trickier. This is where .NET project outsourcing really shows its value. Outsourced teams who specialize in .NET know how to spot patterns that lead to memory trouble and build better habits around resource cleanup from the start. Addressing these issues early means faster, more stable software down the road and fewer surprises right before a big release.

Understanding Memory Leaks In .NET

A memory leak happens when your application keeps holding memory it no longer needs. In .NET, this usually means objects are kept alive longer than they should be, often without you realizing it. The garbage collector in .NET is smart, but it can only reclaim objects that are no longer reachable. As long as something still references an object (a static field, a live local variable, an event subscription, a cache entry), that object stays in memory. An unintended reference like that is what turns an ordinary chunk of memory into a leak.

Here are a few common ways memory leaks show up in .NET applications:

– Objects stored in static fields stay in memory for the life of your app. If you accidentally reference large objects here, they never leave.

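– Event handlers in .NET often cause leaks when you forget to detach them. For example, suppose a short-lived object subscribes to an event on a long-lived one, such as a singleton service or a static class, and never unsubscribes. The publisher’s delegate keeps a reference to the subscriber, so the garbage collector can’t remove it.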

– Caches and dictionaries can grow quietly unless you manage them carefully. Keeping references around longer than needed, especially to large object graphs, piles up memory usage fast.

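– Asynchronous methods or Tasks, when misused, can also hold onto memory. Awaiting something doesn’t eliminate its memory impact. An awaited Task that never completes keeps its continuation alive for as long as the Task itself is reachable, along with everything that continuation captures.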
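The following is a minimal C# sketch of the event-handler case and its fix. SettingsService and SettingsPanel are hypothetical names. The service stands in for a long-lived publisher, and the panel for a short-lived subscriber. The panel detaches its handler in Dispose, so once it’s done the service no longer keeps it reachable.

```csharp
#nullable enable
using System;

// Hypothetical long-lived publisher, e.g. a service that lives for the whole app.
public sealed class SettingsService
{
    public event EventHandler? SettingsChanged;

    // Exposed only so the example can show how many handlers are attached.
    public int SubscriberCount => SettingsChanged?.GetInvocationList().Length ?? 0;

    public void RaiseChanged() => SettingsChanged?.Invoke(this, EventArgs.Empty);
}

// Hypothetical short-lived subscriber, e.g. a view or dialog.
public sealed class SettingsPanel : IDisposable
{
    private readonly SettingsService _service;
    private bool _disposed;

    public SettingsPanel(SettingsService service)
    {
        _service = service;
        // From here on, the service's delegate holds a reference to this panel.
        _service.SettingsChanged += OnSettingsChanged;
    }

    public int RefreshCount { get; private set; }

    private void OnSettingsChanged(object? sender, EventArgs e) => RefreshCount++;

    public void Dispose()
    {
        if (_disposed) return;
        // Detach the handler so the service stops keeping this panel reachable.
        _service.SettingsChanged -= OnSettingsChanged;
        _disposed = true;
    }
}
```

Creating the panel with a using declaration guarantees that Dispose runs when the panel goes out of scope, and the unsubscribe runs with it.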

You might not see problems right away. Leaks happen slowly. Your app might run perfectly for days or weeks, then start lagging, freezing, or crashing. One example we saw involved a customer feedback tool inside a .NET desktop app. It stored user interactions in a static list without a size check. Within a few hours, the app became noticeably slower. Users thought it was their internet. It wasn’t.
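A fix for that kind of unbounded static list can be as simple as capping it. The sketch below assumes a hypothetical BoundedLog<T> built on Queue<T>, which drops the oldest entry once a fixed capacity is reached. The capacity of 1,000 is an arbitrary placeholder, not a value from the project described above.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical bounded replacement for an unbounded static List<T>.
public sealed class BoundedLog<T>
{
    private readonly Queue<T> _items = new Queue<T>();
    private readonly int _capacity;

    public BoundedLog(int capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException(nameof(capacity));
        _capacity = capacity;
    }

    public int Count => _items.Count;

    // Throws InvalidOperationException if the log is empty.
    public T Oldest => _items.Peek();

    public void Add(T item)
    {
        // Once the cap is reached, drop the oldest entry so memory use stays flat.
        if (_items.Count == _capacity)
        {
            _items.Dequeue();
        }
        _items.Enqueue(item);
    }
}

public static class FeedbackStore
{
    // Still static, but capped instead of growing for the life of the process.
    public static readonly BoundedLog<string> Interactions = new BoundedLog<string>(1_000);
}
```

This version isn’t thread-safe. If several threads record interactions, guard Add with a lock or switch to a concurrent structure.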

Memory leaks sneak in during code updates, library upgrades, and even when trying to optimize other parts of your app. That’s why recognizing them matters, and knowing how to find them matters even more.

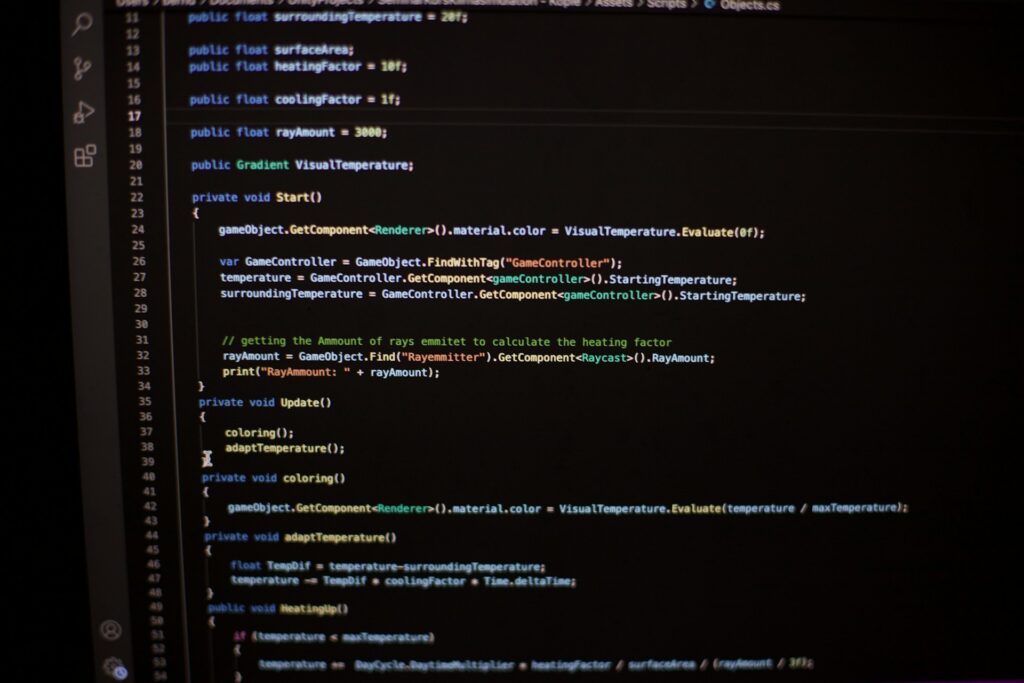

Tools And Techniques For Detecting Memory Leaks

Spotting a memory leak in a .NET application doesn’t have to be guesswork. With the right tools, you can pinpoint the problem before it impacts performance. These tools look at memory usage patterns, object retention paths, and which objects are lingering longer than expected.

Here are some reliable tools that help with memory leak detection:

1. Visual Studio Diagnostic Tools – Built into Visual Studio, this tool lets you take memory snapshots and compare them. You can see objects retained in memory and drill into instances to find out what’s keeping them alive.

2. .NET Memory Profiler – A dedicated profiler that creates detailed memory usage reports, including the reference chains behind each instance. If something’s causing an object to stick around, it will point right to it.

3. dotMemory – JetBrains’ memory profiler helps visualize memory traffic inside your .NET app and lets you filter data by class or namespace. Ideal for medium to large applications.

To effectively use these tools:

– Start by creating a baseline snapshot of your app’s memory after a fresh start.

– Interact with your app like a user would. Click buttons, load pages, and trigger normal workflows.

– Take another snapshot after these actions. Then compare both. Look for objects with increasing counts, especially those that shouldn’t be growing.

– Use reference tracking to find out what’s still holding onto each object.
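Profiler snapshots are the right tool for this job, but the same before-and-after idea can be approximated in code as a quick check. This sketch uses GC.GetTotalMemory(true), which forces a collection before it reports the managed heap size. The difference between the two readings therefore roughly reflects memory the workload left reachable. MemoryCheck and MeasureRetainedBytes are hypothetical names. The number is only a coarse signal: it covers the managed heap alone and says nothing about which objects are retained or why.

```csharp
using System;

public static class MemoryCheck
{
    // Hypothetical helper: returns the growth in live managed heap bytes across a workload.
    // Passing true forces a full collection before each reading, so garbage the
    // workload produced is excluded; what remains is memory still reachable afterwards.
    public static long MeasureRetainedBytes(Action workload)
    {
        long before = GC.GetTotalMemory(forceFullCollection: true);
        workload();
        long after = GC.GetTotalMemory(forceFullCollection: true);
        return after - before;
    }
}
```

Run the same user-like workflow several times and watch whether the retained figure keeps climbing. Steady growth across repetitions is the in-code equivalent of an object count that keeps rising between snapshots.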

Some tips when profiling memory:

– Run tests on clean environments to avoid unrelated memory noise.

– Automate memory snapshots into your QA process for consistent tracking.

– Focus on frequently used features first. Leaks in code that runs often grow the fastest, so they stand out most clearly when you compare snapshots.
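One way to automate part of this in QA is a test that checks whether an object can actually be collected once your code should be done with it. The sketch below uses a WeakReference for that. LeakCheck and IsCollectable are hypothetical helpers, not part of any test framework, and forcing collections like this belongs in tests, not in production code.

```csharp
using System;
using System.Runtime.CompilerServices;

public static class LeakCheck
{
    // Creates the object in a separate, non-inlined frame so no local variable
    // in the caller keeps it reachable, and hands back only a weak reference.
    [MethodImpl(MethodImplOptions.NoInlining)]
    private static WeakReference CreateTracked(Func<object> factory) =>
        new WeakReference(factory());

    // True if the object is collected once only the weak reference remains;
    // false means something (a list, an event, a static field) still holds it.
    public static bool IsCollectable(Func<object> factory)
    {
        WeakReference tracked = CreateTracked(factory);
        GC.Collect();
        GC.WaitForPendingFinalizers();
        GC.Collect();
        return !tracked.IsAlive;
    }
}
```

In a test, the factory would run the feature under test and return the object that should be released afterwards, such as a view model or a request handler. A false result points at a lingering strong reference worth tracking down in the profiler.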

Detecting leaks isn’t about finding one broken part. It’s about identifying where your app stops releasing memory and why. Once you’ve narrowed that down, fixing it becomes much easier. Don’t wait until users report performance issues. Catch it early and keep things running smoothly.

Strategies For Fixing Memory Leaks

Once memory leaks are spotted, patching them takes a focused, step-by-step approach. These fixes don’t always mean rewriting major parts of your codebase. In many cases, small changes in how objects are created and released make a big difference.

Here are some practical ways to address memory leaks in .NET:

1. Dispose Objects Properly. Whenever your application uses objects that wrap unmanaged resources, like file handles or database connections, make sure those objects are disposed after use. Use using statements or explicitly call the Dispose method so the underlying resources are released promptly rather than left waiting on the garbage collector.

2. Use Weak References When Appropriate. If you’re caching objects or listening for events, sometimes it makes sense to use weak references (WeakReference<T> in .NET). An object that is reachable only through weak references can still be collected. A weak reference therefore lets you point at something without keeping it alive.

3. Clear Event Handlers. One major cause of leaks in .NET applications is failing to remove event handlers. Once an object no longer needs to listen to an event, unsubscribe to release the reference. It’s easy to forget this, especially with shared components or long-lived classes.

4. Trim Collections. Lists and dictionaries can grow fast. If you’re using them to store or buffer data, make sure to prune items that are no longer needed. Even better, use bounded structures that evict old entries automatically, such as a fixed-size queue or a cache with a size limit or an expiration policy.

5. Review Singleton Usage. Lots of developers rely on static objects to hold configuration or shared utilities. That’s fine until those objects start holding onto other large objects. Review your singletons and static instances to make sure they’re not becoming hidden memory hogs.
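For the weak-reference approach in item 2, a minimal cache sketch might look like the following. WeakCache is a hypothetical class built on the built-in WeakReference<T>. Its entries do not keep their values alive, so any value nobody else references can disappear at the next garbage collection.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical cache that never becomes the reason a value stays in memory.
public sealed class WeakCache<TKey, TValue>
    where TKey : notnull
    where TValue : class
{
    private readonly Dictionary<TKey, WeakReference<TValue>> _entries = new();

    public int Count => _entries.Count;

    public void Set(TKey key, TValue value) =>
        _entries[key] = new WeakReference<TValue>(value);

    // Returns the value if it is still alive; otherwise drops the stale entry.
    public bool TryGet(TKey key, out TValue? value)
    {
        if (_entries.TryGetValue(key, out var weak) && weak.TryGetTarget(out var target))
        {
            value = target;
            return true;
        }
        _entries.Remove(key); // no-op if the key was never present
        value = null;
        return false;
    }

    // Removes entries whose targets have already been collected.
    public int Purge()
    {
        var dead = new List<TKey>();
        foreach (var pair in _entries)
        {
            if (!pair.Value.TryGetTarget(out _)) dead.Add(pair.Key);
        }
        foreach (var key in dead) _entries.Remove(key);
        return dead.Count;
    }
}
```

The trade-off is deliberate: a weak cache can’t guarantee a hit. Even after a value is collected, its WeakReference<T> wrapper and key still take up space, which is why the sketch includes Purge. For data that should stay available for a defined period, an expiring cache is usually the better fit.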

One .NET API we worked with began experiencing slower response times after its third release. Profiling showed a handful of user analytics objects stored in a cache without any kind of expiration logic. The fix was simple. We swapped the existing cache for one that supports eviction rules and added logic to remove old records after 30 minutes. The slowdown disappeared instantly.
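The exact cache used in that project isn’t named, so the sketch below only illustrates time-based eviction with a 30-minute lifetime. ExpiringCache is a hypothetical class, and its clock is injectable so expiration can be tested without waiting. In a real application, MemoryCache from Microsoft.Extensions.Caching.Memory provides expiration options such as AbsoluteExpirationRelativeToNow and SlidingExpiration out of the box.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical cache that evicts entries older than a fixed time-to-live.
public sealed class ExpiringCache<TKey, TValue> where TKey : notnull
{
    private readonly Dictionary<TKey, (TValue Value, DateTime StoredAt)> _entries = new();
    private readonly TimeSpan _timeToLive;
    private readonly Func<DateTime> _clock;

    // The clock defaults to UTC now; tests can pass a fake one.
    public ExpiringCache(TimeSpan timeToLive, Func<DateTime>? clock = null)
    {
        _timeToLive = timeToLive;
        _clock = clock ?? (() => DateTime.UtcNow);
    }

    public int Count => _entries.Count;

    public void Set(TKey key, TValue value) => _entries[key] = (value, _clock());

    public bool TryGet(TKey key, out TValue value)
    {
        if (_entries.TryGetValue(key, out var entry) && _clock() - entry.StoredAt < _timeToLive)
        {
            value = entry.Value;
            return true;
        }
        _entries.Remove(key); // drops an expired entry; no-op if the key is absent
        value = default!;
        return false;
    }

    // Meant to be called periodically so entries nobody reads again are still evicted.
    public int RemoveExpired()
    {
        DateTime now = _clock();
        var expired = new List<TKey>();
        foreach (var pair in _entries)
        {
            if (now - pair.Value.StoredAt >= _timeToLive) expired.Add(pair.Key);
        }
        foreach (var key in expired) _entries.Remove(key);
        return expired.Count;
    }
}
```

With this setup, new ExpiringCache<string, AnalyticsRecord>(TimeSpan.FromMinutes(30)) would match the 30-minute rule (AnalyticsRecord being a hypothetical record type). RemoveExpired would typically run on a timer.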

Teams that gain experience spotting issues like these can resolve cracks in their system before they grow into deeper problems. What seems like a minor object reference can quickly grow into a performance blocker if it’s repeated across multiple parts of the application.

Preventing Future Memory Leaks

Fixing a memory leak is one thing. Structuring your .NET projects to avoid leaks in the first place requires a different level of discipline. It comes from building consistent habits in the codebase and sticking to them. Leaks often surface after repeated updates, reused components, or missed steps in handoffs across teams.

Here are a few habits to build into your workflow:

– Set a process for regular code reviews. Fresh eyes can spot issues, especially related to lifecycle management. Flag objects that are held longer than they need to be, as well as lengthy chains of static references.

– Test in conditions that simulate real usage. Load test your app with increasing data sizes and user sessions. This tends to bring memory-related issues out of hiding.

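– Use static analysis tools in your build process. The .NET code analyzers include rules such as CA2000 (Dispose objects before losing scope) and CA2213 (Disposable fields should be disposed). Other IDE plugins and CI tools can flag similar patterns, like undisposed resources and references held longer than necessary.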

– Rotate ownership of modules. When developers switch across parts of the app, they bring new perspectives to how memory is being handled. It also helps uncover logic that might otherwise go unnoticed.
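The .NET analysis rule CA2000 (Dispose objects before losing scope) targets a specific pattern: a disposable object that is never disposed. The hypothetical ReportReader below shows two versions of the same method. In the first, the StreamReader is never disposed, so the file handle stays open until the garbage collector eventually finalizes the underlying handle. The second uses a using declaration, so the handle is released as soon as the method returns, even if an exception is thrown. Depending on your project’s analyzer settings, CA2000 may need to be enabled explicitly.

```csharp
using System;
using System.IO;

public static class ReportReader
{
    // Leaky version: the reader is never disposed, so the file handle stays
    // open until the garbage collector gets around to finalizing it.
    public static string ReadFirstLineLeaky(string path)
    {
        var reader = new StreamReader(path);
        return reader.ReadLine() ?? string.Empty;
    }

    // Fixed version: the using declaration disposes the reader (closing the file)
    // when the method exits, whether it returns normally or throws.
    public static string ReadFirstLine(string path)
    {
        using var reader = new StreamReader(path);
        return reader.ReadLine() ?? string.Empty;
    }
}
```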

One simple but often skipped step is creating lightweight cleanup checklists. These keep everyone on the same page when cleaning up during feature updates or refactors. Encourage your team to question which instances need to live permanently and which don’t.

A nearshore .NET project outsourcing team can help standardize these practices across codebases. Having external experts who regularly look for long-term issues improves internal reliability and brings a more objective view of how memory gets handled across features or releases.

Keeping Your .NET Applications Running Smoothly

Fixing memory leaks isn’t just about quality control. It’s about keeping your software predictable across releases and maintaining trust with your users. When software becomes sluggish or unstable, it reflects directly on your product’s value.

By learning where memory issues come from, spotting them early using the right tools, and applying practical routines to prevent them, teams can keep applications strong even as they grow in scope. These routines don’t take much effort once they’re part of your workflow.

If you’re curious about how we’ve helped development teams stay on top of memory management, check out some of the real-world projects we’ve worked on here: https://portfolio.netforemost.com/

If you’re ready to improve memory handling in your applications and want support from a team that knows how to prevent long-term performance issues, working with experts in .NET project outsourcing can make a big difference. Trust NetForemost to help you build reliable solutions that run clean and stay fast over time.